Our Key Criteria and Radar report research process takes approximately 17 weeks from kickoff to publication. Figure 2 shows the key stages of the GigaOm research process.

WHAT ARE THE STEPS INVOLVED IN THE GIGAOM KEY CRITERIA AND RADAR RESEARCH PROCESS?

Figure 2. Key Stages of the GigaOm Research Process

01 Plan Project

- Define scope of research

- Build vendor list

- Send pre-briefing campaign

- Submit deliverables for internal review

02 Gather Data

- Send vendor questionnaires

- Conduct briefings with vendors

- Perform desk research

05 Fact Check

Vendors are invited to review product write-up and scoring for factual inaccuracies

07 Courtesy Preview & Publish

Courtesy preview copy sent to vendors five business days before scheduled publish date

1. PLAN PROJECT

Projects start with a structured planning and review process. In partnership with the research lead, analysts draw from their expertise in the field and rigorous secondary desk research to build an initial picture of the market and the vendor solutions within it.

Vendors that may be a fit for the report are alerted to the upcoming research as part of a pre-briefing campaign several weeks in advance of the briefing window. This campaign asks vendors to confirm their contact information, informs them when the briefing window will occur, and lets them know the analyst is working on refining the scope and inclusion criteria. During the research planning stage, analysts may determine that some vendors that were part of the initial pre-briefing campaign are not a good fit for the report, in which case those vendors are notified and removed from further consideration.

Analysts then set the scope of the report, the inclusion criteria, and the decision criteria that will be used to rate solutions. The proposed content then undergoes extensive internal review to ensure it’s reflective of the market and follows GigaOm’s methodology.

The relationship among table stakes, key features, and emerging features is central to our Key Criteria and Radar reports. These features evolve over time, with emerging features that become broadly adopted evolving into key features, while many key features become so ubiquitous that they graduate to table stakes.

2. GATHER DATA

The data gathering stage is twofold:

- First, GigaOm sends vendors a tailored questionnaire to help analysts understand and evaluate their offering, and invites vendors to schedule a briefing to confirm the analyst’s understanding and explore recent changes and knowledge gaps. We ask vendors to submit the completed questionnaire before the briefing so analysts have time to review it and can focus the call on any remaining knowledge gaps. During the briefing, the analyst takes detailed notes and may record the presentation. GigaOm does not provide a copy of the recording to participating vendors. The briefing window lasts 15 business days.

- Second, analysts conduct independent desk research on vendors, reviewing publicly available information on vendor websites to validate vendor claims and analysts’ own preliminary findings.

During the data gathering stage, analysts may determine that some vendors are not a good fit for the report, in which case those vendors are notified and removed from further consideration.

3. CONSOLIDATE FINDINGS: SCORE SOLUTIONS, CREATE RADAR GRAPHIC, AND DRAFT RADAR REPORT

For scoring key features, emerging features, and business criteria in Radar reports, GigaOm uses a 0 to 5 scale, where 0 means the decision criterion is not available, 1 indicates the feature is poorly executed, and 5 means the feature is executed exceptionally well.

The 0 to 5 scale breaks down as follows:

- 5: Significantly exceeds expectations. Outstanding execution sets the vendor’s implementation apart from others in the sector.

- 4: Exceeds expectations and provides significant value.

- 3: Meets expectations but does not stand out among competition in the sector.

- 2: Lags behind expectations. May fulfill some aspects of the criterion, but falls short of delivering expected value.

- 1: Fails to meet expectations and falls behind others in the sector.

- 0: Lacks support for the criterion.

GigaOm also applies a standard weighting for decision criteria—weightings are determined by a holistic assessment of the net impact the decision criteria have on solution value:

- Key features and business criteria receive the highest weighting and have the most significant impact on vendor positioning in the Radar chart. Emerging features receive a lower weighting and have a commensurately lower impact on vendor positioning in the Radar chart.

- Market segment, deployment model, and additional use cases/buyer requirements that may be relevant to a purchase decision are evaluated on a binary yes/no basis and are not weighted; as such, these items do not factor into a vendor’s designation as Leader, Challenger, or Entrant on the Radar chart.

- Table stakes act as inclusion criteria for the Radar report and are not scored or weighted; as such, table stakes do not factor into a vendor’s position on the Radar chart beyond determining whether the vendor appears in the report (vendors must meet ALL table stakes to be eligible for inclusion in the Radar report).
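The scoring and weighting rules above can be sketched in code. The following Python example is illustrative only: the weight values (1.0 and 0.5), the function name, and the data layout are assumptions, because this document states only that key features and business criteria are weighted more heavily than emerging features. It shows table stakes acting as a pass/fail gate and a weighted average on the 0 to 5 scale.

```python
from typing import Dict, Optional

# Hypothetical weights: the source only states that key features and
# business criteria are weighted higher than emerging features.
WEIGHTS = {"key_features": 1.0, "business_criteria": 1.0, "emerging_features": 0.5}


def aggregate_score(
    table_stakes: Dict[str, bool],
    scores: Dict[str, Dict[str, int]],
) -> Optional[float]:
    """Return a weighted average on the 0 to 5 scale, or None if excluded."""
    # Table stakes are inclusion criteria: a vendor must meet all of them.
    if not all(table_stakes.values()):
        return None

    weighted_sum = 0.0
    total_weight = 0.0
    for category, criteria in scores.items():
        weight = WEIGHTS[category]
        for name, score in criteria.items():
            if not 0 <= score <= 5:
                raise ValueError(f"{name}: score {score} is outside 0 to 5")
            weighted_sum += weight * score
            total_weight += weight
    return weighted_sum / total_weight if total_weight else 0.0
```

A vendor that misses any table stake returns `None` and never reaches the scoring step, which mirrors the rule that table stakes determine eligibility but do not affect position.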

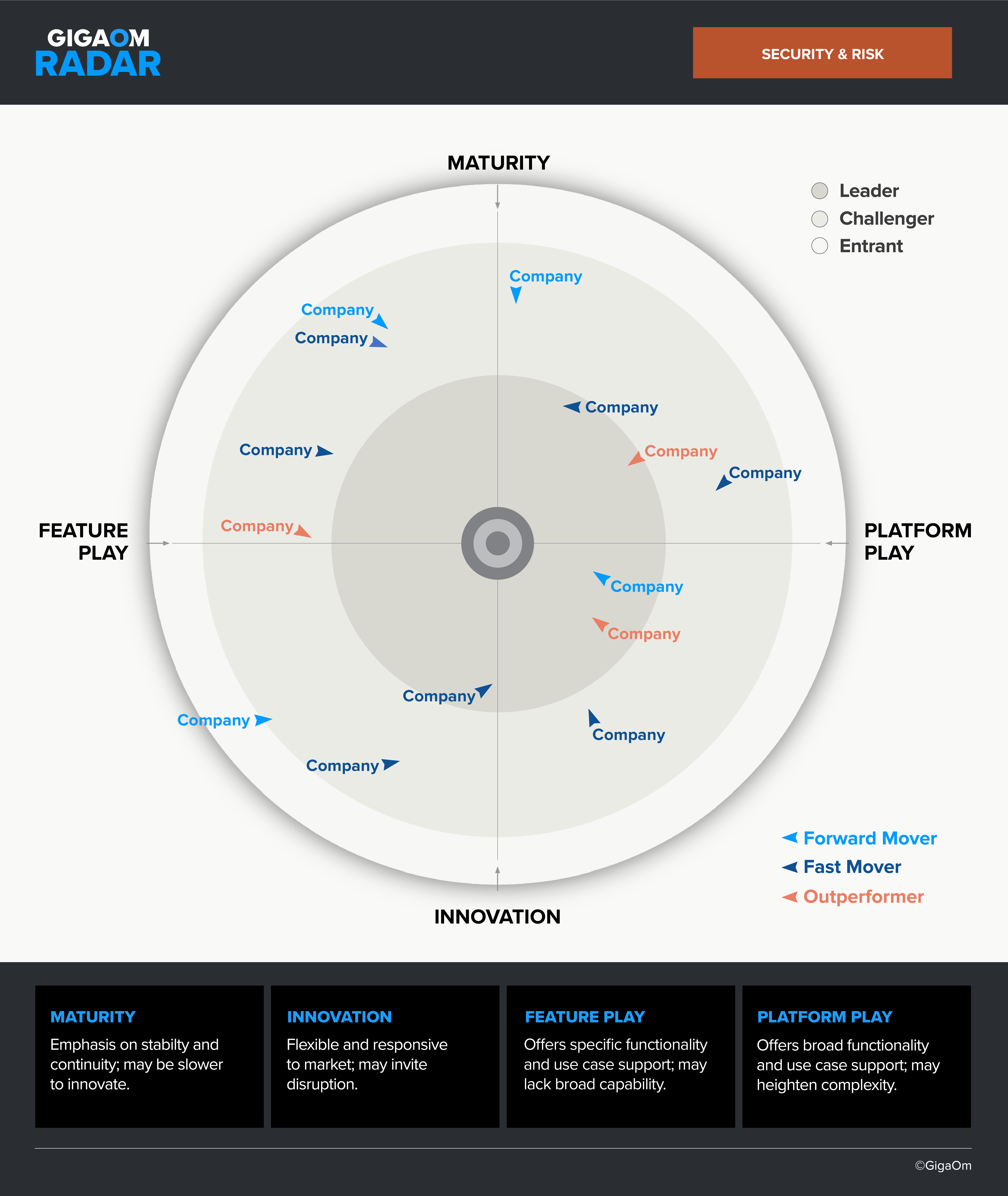

The analyst scores each solution, and solutions are plotted on the GigaOm Radar chart based on their aggregate scores and relative to the other vendors in the evaluation, as shown. Higher-scoring solutions are positioned closer to the center across the Leader, Challenger, and Entrant tiers.

The Radar offers a forward-looking assessment, plotting the current position and projected advancement of each solution over a 12- to 18-month window. Color-coded arrowheads indicate progress based on assessment of strategy and pace of execution, with vendors designated as Forward Movers, Fast Movers, or Outperformers based on their rate of progress (organizational and technological change) compared to the market.

Forward Movers are making below-average progress in the market, Fast Movers are making average progress, and Outperformers are making above-average progress.
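As a rough illustration of the three designations, the sketch below classifies a vendor by comparing a progress value against the market average. The numeric progress measure, the `tolerance` band, and the function name are all assumptions made for this example; the document does not define numeric thresholds.

```python
def mover_designation(progress: float, market_average: float, tolerance: float = 0.1) -> str:
    """Classify rate of progress relative to the market.

    Assumption: progress within `tolerance` of the market average counts
    as average. The source does not define numeric thresholds.
    """
    if progress > market_average + tolerance:
        return "Outperformer"
    if progress < market_average - tolerance:
        return "Forward Mover"
    return "Fast Mover"
```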

Note that the Radar is technology-focused, and business considerations such as vendor market share, customer share, spend, recency or longevity in the market, and so on are not considered in our evaluations. As such, these factors do not impact scoring and positioning on the Radar graphic.

The analyst also evaluates the scope and character of each solution to determine placement in one of the four quadrants of the Radar chart. Each solution is scored using a 0 to 10 scale on four distinct poles—Feature Play and Platform Play (left and right), and Maturity and Innovation (top and bottom). The higher the score, the more strongly a solution hews to that specific pole. Analysts work from an established checklist that identifies the characteristics of each pole to help guide their scoring.

The four poles (and two axes) of the Radar chart are defined as follows:

The vertical Y axis comprises the Maturity and Innovation poles and addresses how the vendor tends to approach the market:

- Maturity: Emphasizes stability and continuity; may be slower to innovate. Vendors with a strong Maturity score will tend to be methodical and structured in their approach, valuing incremental improvement, consistent user experience, and assured compatibility over breakneck advancement. These vendors will minimize volatility.

- Innovation: Flexible and responsive to market; may invite disruption that results in customer fatigue. Vendors with a strong Innovation score will tend to emphasize rapid advancement and an aggressive roadmap, which may result in breaking changes.

The horizontal X axis comprises the Feature Play and Platform Play poles and depicts the relative depth or breadth of an offering:

- Feature Play: Focus on specific functionality and use cases; may lack broad capability. Feature Play solutions are typically more targeted and have greater depth of features for specific scenarios. They may be point solutions designed to serve a specific customer need or use case to a high level of completion or they may target a specific industry or vertical.

- Platform Play: Broad functionality and use case support; may heighten complexity. Platform Play solutions are more generalized and have a greater breadth of features that cut across many use cases; they’re designed to address problems holistically. Platform Play offerings should apply to a wide range of organizations, industries, and user requirements.
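One way to picture quadrant placement is to compare the two opposing pole scores on each axis. The sketch below does exactly that, and it is an assumption: the document says only that a higher score means a solution hews more strongly to a pole, not how the four scores are combined. The class name, function name, and tie-breaking rule are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PoleScores:
    """Scores from 0 to 10 on each of the four Radar poles."""
    maturity: int
    innovation: int
    feature_play: int
    platform_play: int


def quadrant(p: PoleScores) -> str:
    """Name the quadrant from the stronger pole on each axis.

    Assumption: ties resolve to Maturity and Platform Play; the source
    does not say how ties are handled.
    """
    for value in (p.maturity, p.innovation, p.feature_play, p.platform_play):
        if not 0 <= value <= 10:
            raise ValueError(f"pole score {value} is outside 0 to 10")
    vertical = "Maturity" if p.maturity >= p.innovation else "Innovation"
    horizontal = "Platform Play" if p.platform_play >= p.feature_play else "Feature Play"
    return f"{vertical} / {horizontal}"
```

For example, a solution scored 8 on Maturity, 3 on Innovation, 2 on Feature Play, and 7 on Platform Play would land in the Maturity / Platform Play quadrant under this rule.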

4. INTERNAL REVIEW

Every GigaOm Radar and Key Criteria report is subject to formal reviews to verify research scope, establish criteria for assessment, and assure quality of analysis. Reviews include a technical review (conducted by the research lead), a peer review (conducted by a separate analyst who is also a subject matter expert in the area), and a quality review (conducted by the research lead and the content team). These reviews are performed at both the early research scoping stage and later draft submission stage, ensuring the research adheres to our values and meets product expectations.

5. FACT CHECK

Every GigaOm Radar report goes through a fact check stage, and all vendors evaluated in the Radar are invited to participate in a fact check review. During the fact check review process, we provide each vendor with a link to the in-progress report with the write-ups and scoring for other vendors removed.

In scope for fact check:

- Vendors are asked to note statements of factual inaccuracy related to their scores and product write-up only.

- Vendors should note statements of factual inaccuracy by leaving a comment in the fact check doc, and feedback within the comment should be limited to ~100 words.

- Vendors may request revisions to correct factual inaccuracies related to their scores and/or product write-up provided they offer supporting documentation to the analyst for review that shows the feature is generally available (not in beta or preview) at the time of fact check.

Out of scope for fact check:

- Comments or requested rewrites to the main sections of the report (Executive Summary, Market Categories and Deployment Types, Decision Criteria Comparison, and Analyst’s Overview) will not be considered.

- Comments or issues with other vendors’ placement on the Radar graphic will not be considered.

- Requested rewrites that do not address a factual inaccuracy and attempt to insert promotional language or marketing copy will not be considered.

- Marketing literature is not considered suitable evidence at fact check.

If feedback is received that does not follow this guidance, the response may be discarded and vendors will be given one opportunity to resubmit.

The Radar chart shared with vendors at fact check reflects our findings based on data gathered during briefings and/or desk research. It is subject to change and is not a final chart. Every vendor is invited to participate in this process and request corrections of factual inaccuracies. If scores for key features, emerging features, or business criteria change as a result of corrections, those changes will affect the positioning of vendors in the final chart.

The fact check review period typically lasts five business days, after which the analyst takes five business days to review vendor feedback and make adjustments as needed to correct inaccuracies.
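The two five-business-day windows can be turned into calendar dates with a small helper. This is a minimal sketch under stated assumptions: weekends are skipped, public holidays are ignored, and a window that starts on a given day ends five weekdays later. The function names and the returned dictionary keys are hypothetical.

```python
from datetime import date, timedelta


def add_business_days(start: date, days: int) -> date:
    """Advance by the given number of weekdays, skipping Saturday and Sunday.

    Assumption: public holidays are ignored.
    """
    current = start
    remaining = days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday is 0, Friday is 4
            remaining -= 1
    return current


def fact_check_schedule(fact_check_start: date) -> dict:
    """Hypothetical helper returning the end of each five-day window."""
    vendor_deadline = add_business_days(fact_check_start, 5)
    analyst_deadline = add_business_days(vendor_deadline, 5)
    return {"vendor_feedback_due": vendor_deadline, "analyst_review_done": analyst_deadline}
```

With a fact check that opens on Monday, January 1, 2024, this sketch puts vendor feedback due on Monday, January 8, and the analyst review complete on Monday, January 15.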

Note that revision requests and documentation received outside the five-day fact check review window are not guaranteed inclusion in the final report. Additionally, at this stage, any requests for briefings fall outside of our standard process. In both cases (late vendor feedback and/or late requests for briefings), such requests and any subsequent escalations are not guaranteed; they are considered on a case-by-case basis and require approval from GigaOm’s executive leadership.

6. REFINE AND DELIVER: FINALIZE SCORING, RADAR GRAPHIC, AND RADAR REPORT

Following the fact check review period, the analyst takes five business days to respond to feedback and update scores, the Radar graphic, and product write-ups as needed to correct factual inaccuracies. These deliverables are then considered final and the report is moved into our publishing queue.

7. COURTESY PREVIEW AND PUBLICATION

Prior to publication, GigaOm sends all vendors included in the GigaOm Radar a courtesy preview of the final report. The preview period typically lasts five business days, during which vendors can draft marketing materials (press releases, social posts, and so forth) to share with the GigaOm marketing team for review in advance of the report’s publication.

Importantly, the preview period is not an extension of our fact check review process, and GigaOm does not accept new information from vendors or escalation during this time. The content of the Radar report is considered to be final at this point.