Table of Contents

- Executive Summary

- Data Pipeline Sector Brief

- Decision Criteria Analysis

- Analyst’s Outlook

- Methodology

- About Andrew Brust

- About GigaOm

- Copyright

1. Executive Summary

Data pipelines are solutions that manage the movement and/or transformation of data, readying it for storage in a target repository and preparing it for use in an organization’s various analytic workloads. Data pipelines automate repetitive data preparation and data engineering tasks, and they underpin the value of an organization’s analytical insights by ensuring the quality of the data behind them.

Data pipelines can operate according to several frameworks, or modes, including:

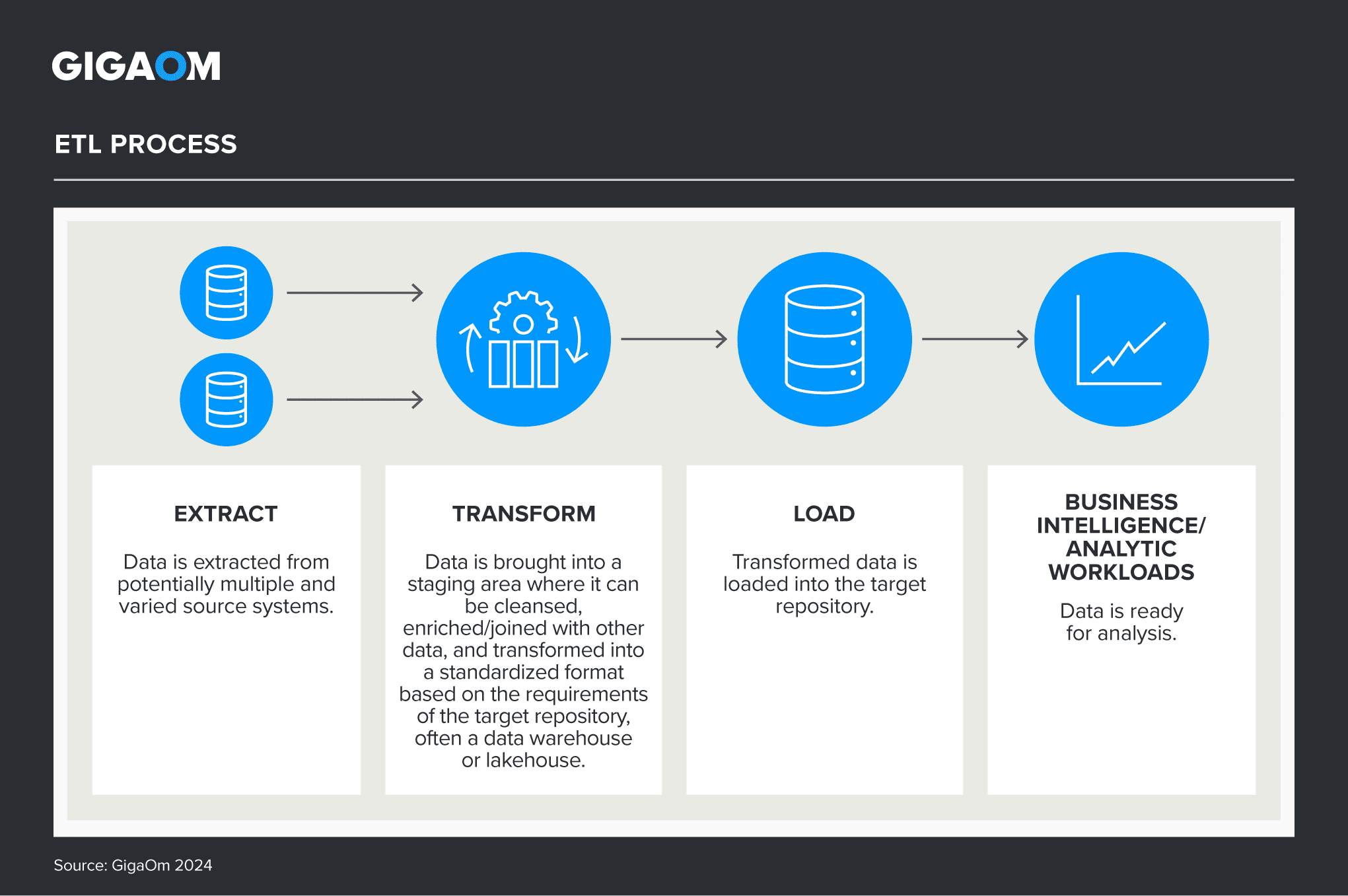

- ETL (extract, transform, load): Data is first extracted from its source system(s) and consolidated; next, any number of transformations are applied, based on the envisioned analysis and requirements of the intended destination system; and finally, the transformed data is loaded into the target system and stored and persisted accordingly. The overall category of data pipelines originates from this framework. (See Figure 1.)

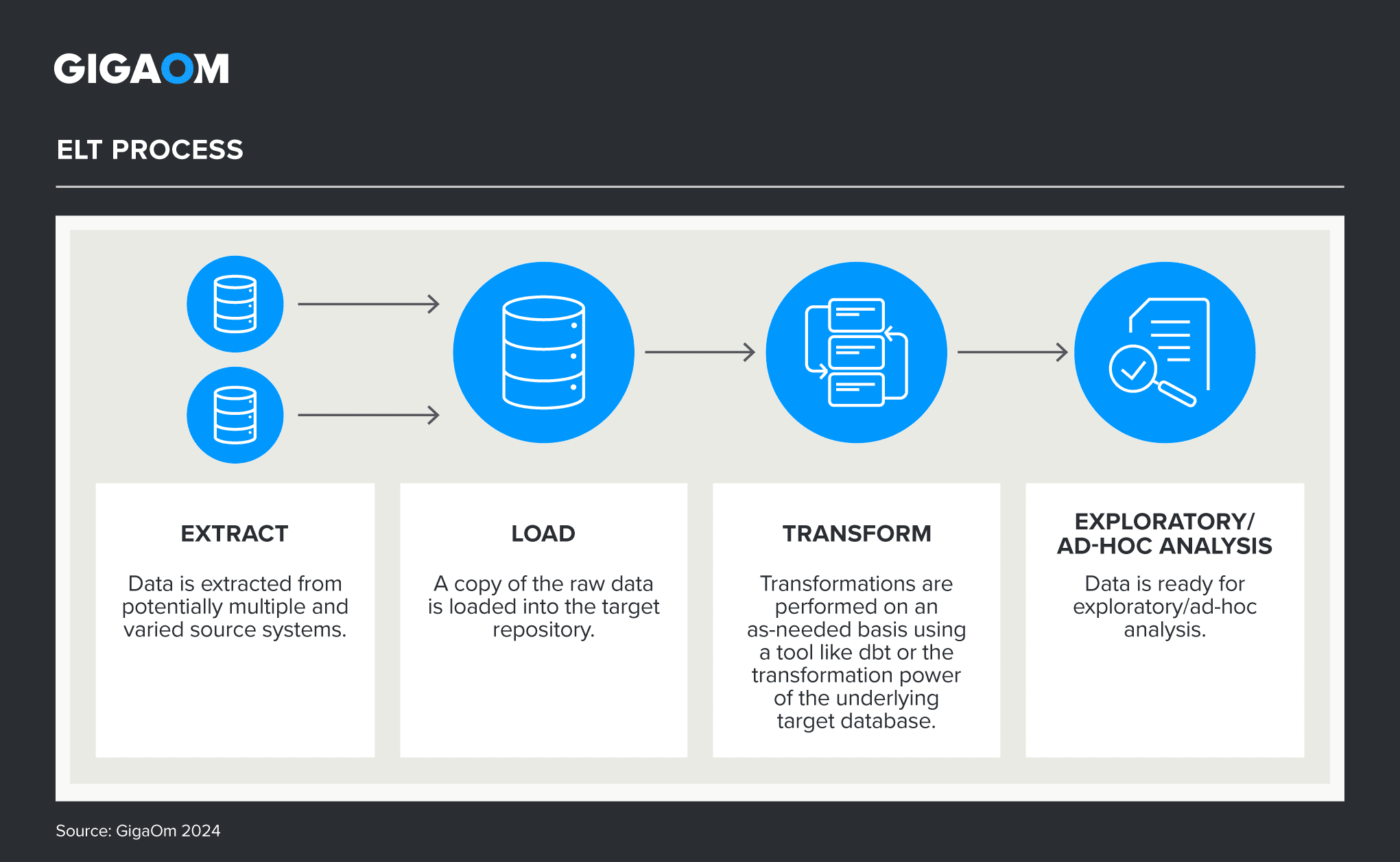

- ELT (extract, load, transform): Data is first extracted as-is from its source system and loaded into a target system, remaining unmodified as it moves. Transformations are applied to the data after it has been loaded—often by leveraging a tool such as the data build tool (dbt). Transformations can be applied and reapplied to the raw data on an as-needed basis, without any need to re-ingest the data. (See Figure 2.)

- CDC (change data capture): Changes to data and metadata are continuously captured, in real-time or near-real-time increments, and replicated from source to destination. This continuous approach to data movement differentiates CDC from the ETL and ELT frameworks, through which data extraction and movement is often (but not always) done in batches, at scheduled intervals, or on demand.

Today’s data pipeline solutions can support one or a combination of these modes.

Figure 1: ETL Process

Figure 2: ELT Process
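To make the contrast between the three modes concrete, here is a minimal Python sketch that uses the standard-library `sqlite3` module with an in-memory database standing in for the target system. Everything in it is hypothetical and chosen for illustration: the source rows, the table names `orders` and `raw_orders`, the helper functions, and the change-event shape (`op`/`row` dictionaries). Real CDC tools typically read the source database’s transaction log and emit richer events, and an ELT deployment would usually manage its SQL transformations with a tool such as dbt rather than inline strings.

```python
import sqlite3

# Hypothetical source records, standing in for rows read from an operational system.
SOURCE_ROWS = [
    {"id": 1, "email": " Alice@Example.com ", "amount": "19.99"},
    {"id": 2, "email": "bob@example.com", "amount": "5.00"},
]


def clean(row):
    """Transformation: trim and lowercase the email, cast the amount to a float."""
    return (row["id"], row["email"].strip().lower(), float(row["amount"]))


def run_etl(conn, rows):
    """ETL: transform in the pipeline, then load only the finished rows."""
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, email TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [clean(r) for r in rows])


def run_elt(conn, rows):
    """ELT: load the raw rows unchanged, then transform inside the target with SQL."""
    conn.execute("CREATE TABLE raw_orders (id INTEGER, email TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?, ?)",
        [(r["id"], r["email"], r["amount"]) for r in rows],
    )
    # raw_orders is left untouched, so this transformation can be dropped,
    # redefined, and rerun without re-ingesting anything from the source.
    conn.execute(
        "CREATE VIEW orders AS "
        "SELECT id, LOWER(TRIM(email)) AS email, CAST(amount AS REAL) AS amount "
        "FROM raw_orders"
    )


def apply_changes(conn, events):
    """CDC: replay change events, in order, against a target table keyed on id."""
    for event in events:  # hypothetical event shape: {"op": ..., "row": {...}}
        if event["op"] in ("insert", "update"):
            # Upsert: INSERT OR REPLACE overwrites any existing row with the same id.
            conn.execute(
                "INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", clean(event["row"])
            )
        elif event["op"] == "delete":
            conn.execute("DELETE FROM orders WHERE id = ?", (event["row"]["id"],))
        else:
            raise ValueError(f"unknown change operation: {event['op']!r}")
```

Running `run_etl` and `run_elt` on separate connections produces the same result from `SELECT * FROM orders`; the difference is where the transformation lives. Passing `apply_changes` a single update event for one `id` then modifies only that row in the ETL-built table, rather than reloading the whole table as a scheduled batch job would.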

Business Imperative

Building a data pipeline from scratch is time-consuming and code-intensive. Data engineers have often shouldered a disproportionate share of the burden of building and maintaining custom pipelines, along with many of the related data transformation and data preparation tasks. Because today’s organizations must work with immense volumes of data from a wide variety of sources, the resulting workload can stretch resources to the limit.

Data pipeline tools have evolved to meet these needs. These platforms automate the creation and maintenance of pipelines, as well as the repetitive tasks involved in cleansing and preparing data. They can also schedule loads and other tasks to run automatically at predetermined intervals. In doing so, data pipeline solutions can increase efficiency, improve collaboration between technical and non-technical users, and reduce complexity and redundancy.
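Interval-based scheduling of loads can be illustrated with Python’s standard-library `sched` module. This is a minimal sketch under stated assumptions, not how any particular product implements scheduling: `run_every`, `SimulatedClock`, and the hourly interval are hypothetical, and the simulated clock exists only so the example completes instantly. A production deployment would more likely rely on cron or a workflow orchestrator.

```python
import sched


class SimulatedClock:
    """Hypothetical stand-in for real time so the demo runs instantly."""

    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        # Instead of blocking, jump the clock forward.
        self.now += seconds


def run_every(scheduler, interval_s, task, max_runs):
    """Run task(run_number) immediately, then every interval_s seconds, max_runs times."""

    def tick(run_number):
        task(run_number)
        if run_number + 1 < max_runs:
            # The next run is queued relative to when this one finished,
            # so a slow task pushes later runs back (no catch-up).
            scheduler.enter(interval_s, 1, tick, argument=(run_number + 1,))

    scheduler.enter(0, 1, tick, argument=(0,))


if __name__ == "__main__":
    clock = SimulatedClock()
    scheduler = sched.scheduler(clock.time, clock.sleep)
    run_every(
        scheduler, 3600, lambda n: print(f"load {n} at t={clock.time():.0f}s"), max_runs=3
    )
    scheduler.run()  # prints loads at t=0s, t=3600s, and t=7200s
```

To run against wall-clock time instead, the scheduler could be built as `sched.scheduler(time.monotonic, time.sleep)`. Rescheduling from inside `tick` keeps the sketch short, but it lets runs drift later when a load takes a long time; fixed-time schedules avoid that drift at the cost of handling overlapping runs.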

Sector Adoption Score

To help executives and decision-makers assess the potential business impact and value of deploying a data pipeline solution, this GigaOm Key Criteria report provides a structured assessment of the sector across five factors: benefit, maturity, urgency, impact, and effort. By scoring each factor according to how strongly it compels or deters adoption of a data pipeline solution, we arrive at an overall Sector Adoption Score (Figure 3) of 4.2 out of 5, where 5 indicates the strongest possible recommendation to adopt. This score indicates that a data pipeline solution is a credible candidate for deployment and worthy of thoughtful consideration.

The factors contributing to the Sector Adoption Score for data pipelines are explained in more detail in the Sector Brief section that follows.

Figure 3: Sector Adoption Score for Data Pipelines

This is the third year that GigaOm has reported on the data pipeline space in the context of our Key Criteria and Radar reports. This report builds on our previous analysis and considers how the market has evolved over the last year.

This GigaOm Key Criteria report highlights the capabilities (table stakes, key features, and emerging features) and nonfunctional requirements (business criteria) for selecting an effective data pipeline solution. The companion GigaOm Radar report identifies vendors and products that excel in those capabilities and metrics. Together, these reports provide an overview of the category and its underlying technology, identify leading data pipeline offerings, and help decision-makers evaluate these solutions so they can make a more informed investment decision.

GIGAOM KEY CRITERIA AND RADAR REPORTS

The GigaOm Key Criteria report provides a detailed decision framework for IT and executive leadership assessing enterprise technologies. Each report defines relevant functional and nonfunctional aspects of solutions in a sector. The Key Criteria report informs the GigaOm Radar report, which provides a forward-looking assessment of vendor solutions in the sector.